Advancements in artificial intelligence have intensified the debate surrounding the development of lethal autonomous weapons systems. These killer robots can identify, track, and prosecute targets on their own, without human oversight, and have been used during conflicts in Gaza, Libya, Nagorno-Karabakh, and Ukraine. Critics caution against heightened autonomy in war, citing the potential for abuse and for unintended consequences, including crisis escalation and civilian casualties. Advocates claim the opposite, emphasizing robotic agency in future conflict. They contend that AI-enhanced weapons will encourage human-machine teaming that helps countries maintain lethal overmatch against adversaries, and do so more justly than conventional, human-controlled weapons, particularly because AI is thought to minimize collateral damage.

Despite rapid advancements in AI and the growing proliferation of new technologies on the battlefield, it is unclear what shapes US service members’ trust in these technologies. Indeed, scholars have yet to systematically explore this topic, which is puzzling for two reasons. First, policymakers, academics, and military leaders agree that service members’ trust is integral to human-machine teaming, in which troops partner with AI-enhanced military technologies to optimize battlefield performance. Service members are responsible for testing, fielding, and managing the use of these technologies, and, notwithstanding the chain of command, there is no guarantee that they will trust AI. Second, research shows that military capabilities can underdeliver when users do not trust them, recreating the very vulnerabilities they are designed to overcome, such as prolonged targeting approvals that can affect war outcomes.

To determine what shapes service members’ trust in human-machine teaming, defined here as the belief that a new AI-enhanced capability will perform as expected, I surveyed senior US military officers attending the US Army War College in Carlisle, Pennsylvania, and the US Naval War College in Newport, Rhode Island. These are specially selected officers from among whom the US military will draw its generals and admirals, meaning they will be responsible for adopting emerging capabilities during future conflicts. Their attitudes toward human-machine teaming, then, matter.

Contrary to assumptions of service members’ automatic trust in AI, which are reflected in emerging warfighting concepts across the US military, I find that service members can be skeptical of operating alongside AI-enhanced military technologies on the battlefield. Their willingness to partner with these emerging capabilities is shaped by a tightly calibrated set of technical, operational, and oversight considerations. These results provide the first experimental evidence of military attitudes of trust in human-machine teaming and have implications for research, military modernization, and policy.

Three Ingredients of Trust

In 2017, Jacquelyn Schneider and Julia Macdonald published an article in Foreign Affairs entitled “Why Troops Don’t Trust Drones: The ‘Warm Fuzzy’ Problem.” They found that soldiers’ preferences for drones covary with operational risk: when ground forces are under fire, troops trust manned aircraft over unmanned aerial vehicles. Though these findings were contested, especially by drone operators themselves, the study benchmarked attitudes of trust among a cross section of the US military, including personnel responsible for integrating drones with ground forces, such as joint terminal attack controllers and joint fires observers.

We lack comparable evidence on service members’ trust in partnering with AI on the battlefield. At most, scholars have studied public attitudes of trust toward AI, such as the AI used in driverless vehicles, police surveillance, or social media, and recommended further study of preferences within the military. While some scholars have researched Australian and Korean soldiers’ attitudes toward AI, these studies do not specifically address the trust that is integral to human-machine teaming and are more descriptive than experimental. Thus, scholars cannot draw conclusions about the causal relationships between factors of AI performance and soldiers’ trust in partnering with machines during war.

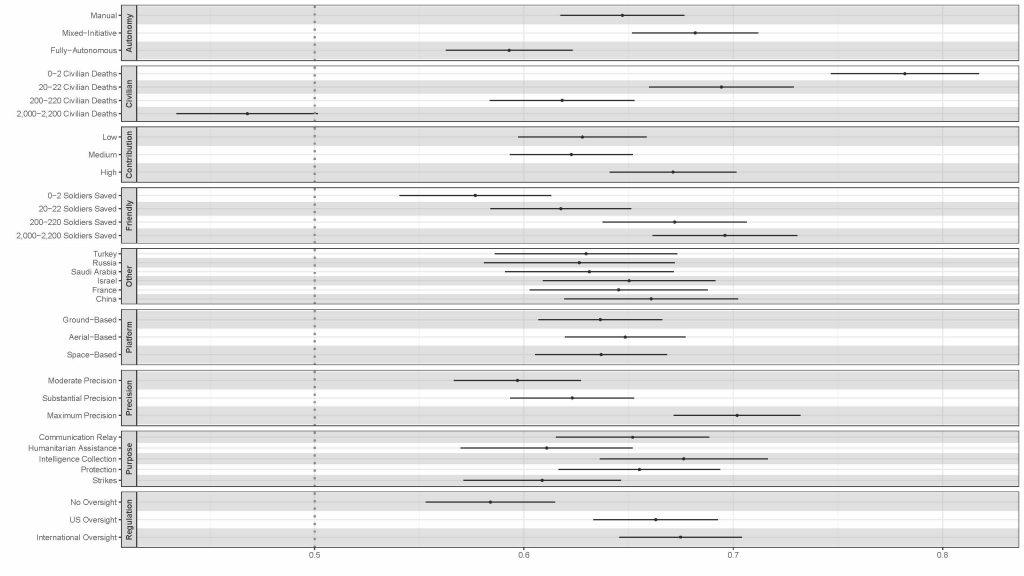

My approach is different. Drawing on extensive research on public attitudes toward drones and AI, I tested how varying nine attributes of AI-enhanced military technologies may shape service members’ willingness to partner with these capabilities on the battlefield. Specifically, I used a survey experiment to vary how these capabilities are used, for what outcomes, and with what oversight. I administered the survey in October 2023 at the war colleges in Carlisle and Newport. Respondents first read a randomized scenario asking them to consider a hypothetical war in which US military forces use a new AI-enhanced military technology with different attributes. I then asked them to gauge their trust in partnering with the technology on a 5-point Likert scale, where 1 corresponds to “strongly distrust” and 5 corresponds to “strongly trust.” I analyzed the data using statistical methods, including by calculating respondents’ marginal mean willingness to trust AI-enhanced military technologies at each level of the different attributes (Figure 1).
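For readers unfamiliar with marginal means, the idea is simple once each rated scenario is stored alongside the attribute levels it displayed: for every level of an attribute, average the trust ratings of all scenarios that showed that level, pooling over whatever the other attributes happened to be. The Python sketch below illustrates this under stated assumptions. The two attributes, their level names, the four ratings, and the function `marginal_means` are all hypothetical, not the survey’s actual data or code, and the study itself varied nine attributes. Because levels are randomized independently, this pooled average can be read as the expected rating for a level; a full analysis would also report uncertainty, for example with standard errors clustered by respondent, since each respondent rated several scenarios.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy data, NOT the survey's results: each record is one rated
# scenario, pairing the attribute levels shown with a 1-5 Likert trust rating.
ratings = [
    ({"autonomy": "human supervised", "use": "nonlethal"}, 4),
    ({"autonomy": "fully autonomous", "use": "lethal"}, 2),
    ({"autonomy": "human supervised", "use": "lethal"}, 3),
    ({"autonomy": "fully autonomous", "use": "nonlethal"}, 3),
]


def marginal_means(records):
    """Return the mean rating for each (attribute, level), pooling over all other attributes."""
    buckets = defaultdict(list)
    for profile, rating in records:
        # Every scenario contributes its rating once to each level it displayed.
        for attribute, level in profile.items():
            buckets[(attribute, level)].append(rating)
    return {key: mean(values) for key, values in buckets.items()}


if __name__ == "__main__":
    for (attribute, level), mm in sorted(marginal_means(ratings).items()):
        print(f"{attribute} = {level}: {mm:.2f}")
```

In this toy data, human-supervised and nonlethal scenarios each average 3.5, against 2.5 for fully autonomous and lethal ones; comparing such level-by-level averages is what a figure of marginal means displays.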

Of note, my sample is not representative of the US military, nor of the US Army and Navy. It is a convenience sample that nonetheless offers extremely rare insights into how service members may trust manned-unmanned teaming. Because my survey used a within-subject design, each respondent rated nine randomized scenarios for AI-enhanced military technologies, drawn from a pool of thousands of possible attribute combinations, and gauged their trust in partnering with machines on the battlefield. As a result, nearly one hundred high-quality responses yielded over eight hundred unique observations, offering strong statistical power. This pool of officers also offers a hard test for my expectations: they are older, which suggests they may be predisposed to distrust AI-enhanced capabilities. Thus, while I cannot draw sweeping generalizations from my results, I offer the first-ever account of how US service members trust AI-enhanced military technologies given variation in their use, outcomes, and oversight.

Overall, I find that service members question the merits of human-machine teaming in the context I study: the hypothetical but realistic possibility of a war between the United States and a near-peer adversary. Service members’ trust in partnering with AI-enhanced military technologies is based on a combination of three overarching factors: the technical specifications of machines, their perceived effectiveness, and regulatory oversight.

First, I find that service members’ trust in partnering with machines can be heightened when the machines are used nonlethally, such as for intelligence collection; are not fully autonomous, meaning humans supervise their use; and are highly precise, implying a low false positive rate or minimal target misidentification. Echoing other respondents, one service member noted that “for strike systems without a human to hold accountable, I want to see precision above 95% accuracy.” Indeed, the probability that service members trust partnering with machines on the battlefield drops sharply when the machines are used autonomously for lethal operations, such as strikes. Research shows that this consideration also reduces public support for killer robots more than other factors do.

Second, I find that service members trust partnering with machines when they minimize civilian casualties, maximize the protection of friendly forces, and contribute the most to mission success. This result suggests that service members integrate different moral logics when determining their trust for human-machine teaming. One respondent explained that trust was a function of the “ratio of civilian casualties to US forces saved. I distrust fielding any system that can cause 1K-2K civilian casualties to save 0-2 soldiers. However, I am willing to accept civilian casualties if it saves at least as many US soldiers.” Another respondent added that trust was based on the “estimated civilian deaths compared to the estimated military deaths.”

These findings are important because they contradict scholars, including Neil Renic and Elke Schwarz at the University of Copenhagen and Queen Mary University of London, respectively, who argue that emerging technologies have resulted in riskless or post-heroic war. Instead, service members recognize a greater liability to be harmed during war, even when military action is shaped by AI that is thought to enhance troops’ protection. My findings also contradict a belief among some experts, such as TX Hammes at the US National Defense University and David Deptula at the Mitchell Institute for Aerospace Studies, that such normative concerns are relatively inconsequential for the US military’s adoption of killer robots. My analysis shows that the probability of service members’ trust in human-machine teaming is shaped greatly by moral considerations.

Finally, I find that service members also trust partnering with AI-enhanced military technologies when their use aligns with international law. Like others, one respondent explained that “though I trust US regulation/oversight, it’s important for international oversight to ensure compliance to international laws rather than domestic law.” This result holds regardless of variation in the prospects for mission success and international competition. Even when AI-enhanced military technologies contribute more to the mission, service members demonstrate greater trust in partnering with them when their use comports with international law. A lack of oversight also reduces the probability of service members’ trust in human-machine teaming, even when they are informed that perceived adversaries, such as China or Russia, are also adopting AI-enhanced military technologies.

Trust in AI: Hard to Gain, Easy to Lose

These findings suggest that service members’ trust is complex and multidimensional. Trust can also be complicated by generational differences across the military ranks. In November 2023, I extended my survey experiment to cadets enrolled in the Reserve Officers’ Training Corps across the United States, who are training to become officers. Nearly five hundred cadets completed the survey, resulting in over four thousand unique observations and offering extremely rare insights into how these so-called digital natives perceive human-machine teaming.

I find that cadets trust partnering with AI-enhanced military technologies more than their future commanders do, but not for the reasons scholars typically assume, such as misplaced optimism. Crucially, cadets are more willing to partner with AI-enhanced capabilities that are less accurate, implying more false positives or target misidentification, particularly when these capabilities contribute the most to mission success.

Together, the results from these related surveys have key implications for research, military modernization, and policy. In terms of research, my findings suggest the need for more study, especially of the type of conflict; echelon of use; munition, including nuclear weapons; and target, ranging from personalities to physical structures to networks. As Erik Lin-Greenberg’s research on the escalatory potential of drones suggests, these considerations may also shape service members’ trust in partnering with AI-enhanced capabilities on the battlefield.

In terms of military modernization, my findings suggest that military leaders should further clarify the warfighting concepts encouraging the development of AI-enhanced military technologies; the doctrine guiding their integration, including in what domain, at what echelon, in what formations, and for what purpose(s); and the policies governing their use. Aligning the concepts, doctrine, and policies that govern AI with service members’ expectations promises to encourage more trust in human-machine teaming. Finally, in terms of policy, if service members’ trust in human-machine teaming is shaped in part by international oversight, policymakers should explain how US policies on autonomous weapons systems coincide with or diverge from international law, as well as from the norms conditioning their use.

While military leaders claim that human-machine teaming is necessary for success in future wars and often assume service members will trust partnering with AI-enhanced military technologies, my analysis shows that trust is not a foregone conclusion. Trust, like personal integrity, is hard to gain and easy to lose. Amid dizzying developments of AI across sectors and use cases, including within the military, this first evidence provides a useful roadmap for US political and military leaders to enhance service members’ trust in human-machine teaming. The cost of not heeding these insights could be the difference between winning and losing future wars, should analysts’ assessments of AI’s implications on the battlefield prove accurate.

Lieutenant Colonel Paul Lushenko, PhD, is an assistant professor at the US Army War College, Council on Foreign Relations term member, and non-resident senior fellow at Cornell University’s Tech Policy Institute.

The views expressed are those of the author and do not reflect the official position of the United States Military Academy, Department of the Army, or Department of Defense.

Image credit: Spc. Jaaron Tolley, US Army